You need to be able to test an application the same way a user would use it. Despite its apparent disadvantages compared with unit testing, integration testing is a necessary part of a balanced QA plan for keeping a product robust.

Sure, unit tests are great for checking the individual components of a system, but what if your system relies on many different components working together? Where unit tests can't see the big picture, integration testing comes in. When you're dealing with web apps, this may include cross-browser checks of user-facing functionality such as navigating the site, pressing buttons, filling in forms, and so on. Selenium is an excellent solution for handling this, and one that's worked particularly well for us.

At HubSpot we maintain a continuous deployment environment in which new features are pushed to production all the time. Automated browser-based regression testing with Selenium and the WebDriver API helps to ensure that our code changes don't break the builds. Selenium supports multiple programming languages, and once the framework was installed, we chose Python for writing our tests.

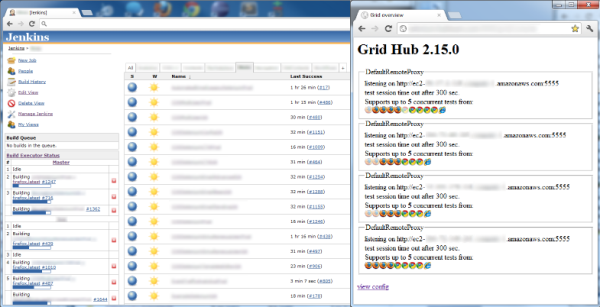

Once the tests are written and committed to GitHub, our continuous integration server (Jenkins) picks them up and runs them at regular intervals. To run the tests, Jenkins accesses the Selenium Grid Hub, which manages our available web browsers running on Amazon EC2 instances in the cloud. If there are any errors at runtime, problems are easy to track down because screenshots get saved along with the error output. Jenkins then sends us email notifications so that we are alerted to any issues right away.

(Above/left - Generic example of Jenkins in action. You can see which jobs are building and their status.)

(Above/right - Generic example of Grid Hub in action. You can see the status of machines and which browsers are running Selenium tests.)
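
To make the Grid Hub and screenshot steps a bit more concrete, here is a minimal sketch in Python, assuming the Selenium 4 bindings. The hub URL, the helper names (`make_grid_driver`, `run_with_screenshot`, `check_homepage_title`, `example_run`), and the `screenshots` directory are placeholders made up for illustration, not the actual HubSpot setup.

```python
import os
import time


def make_grid_driver(hub_url, browser="firefox"):
    """Open a browser session through a Selenium Grid hub (Selenium 4 API).

    selenium is imported lazily so the helpers below can be exercised
    without a browser or a grid being available.
    """
    from selenium import webdriver

    if browser == "firefox":
        options = webdriver.FirefoxOptions()
    else:
        options = webdriver.ChromeOptions()
    return webdriver.Remote(command_executor=hub_url, options=options)


def run_with_screenshot(driver, test_fn, name, out_dir="screenshots"):
    """Run test_fn(driver); on any failure save a screenshot, then re-raise."""
    try:
        test_fn(driver)
    except Exception:
        os.makedirs(out_dir, exist_ok=True)
        path = os.path.join(out_dir, f"{name}-{int(time.time())}.png")
        driver.save_screenshot(path)  # standard WebDriver method
        print(f"FAILED {name}: screenshot saved to {path}")
        raise  # let the CI job see the failure and mark the build red


def check_homepage_title(driver):
    """Hypothetical test: load a page and check its title."""
    driver.get("https://www.example.com/")
    assert "Example" in driver.title


def example_run(hub_url):
    """Hypothetical entry point; hub_url would point at your own grid."""
    driver = make_grid_driver(hub_url)
    try:
        run_with_screenshot(driver, check_homepage_title, "homepage_title")
    finally:
        driver.quit()  # always release the browser back to the grid
```

Re-raising after the screenshot matters: the CI job still fails, and the saved image sits next to the error output for whoever investigates.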

We have both a QA and a Production environment. As new features get pushed to QA for testing before they reach Production, a post-deploy script immediately kicks off the associated Jenkins jobs so that we can determine whether anything broke. This is also a good time to write new Selenium tests for the features being added. If all tests pass (not just Selenium, but also all our unit tests, etc.), then it's safe to deploy code to Production.

One weakness of Selenium is that changes to the UI can break tests even when there's no regression, because Selenium finds page elements through locators such as CSS selectors, and those locators can stop matching when the markup changes. That's why a failure doesn't necessarily mean something broke. To avoid these false positives, tests may need to be updated as the application code changes. With good planning during test creation, you can write tests that adapt better to visual changes in an app, so they stay robust during code pushes. There may be a tradeoff between test quality and speed of test creation, but quality is generally the better path in the long run.
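
One common way to make tests more tolerant of UI changes is to keep every selector in a single place and to prefer stable hooks over layout-dependent paths. The sketch below is only an illustration of that idea: the `LoginPage` class and the `data-test` attribute names are hypothetical, and the driver is assumed to be any object exposing Selenium's `find_element(by, value)` method.

```python
CSS = "css selector"  # the string value of selenium's By.CSS_SELECTOR


class LoginPage:
    """Hypothetical page object: every selector the tests need lives here."""

    # Prefer ids or dedicated test attributes over layout-dependent paths
    # such as "div.container > div:nth-child(2) > form input", which break
    # as soon as the page is restyled.
    EMAIL = (CSS, "[data-test='login-email']")
    PASSWORD = (CSS, "[data-test='login-password']")
    SUBMIT = (CSS, "[data-test='login-submit']")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, email, password):
        """Fill in the form and submit it using the locators above."""
        self.driver.find_element(*self.EMAIL).send_keys(email)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()
```

With this layout, a markup change means editing one locator in `LoginPage` rather than hunting through every test that logs in.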

We use a MySQL DB to store much of the data we gather from Selenium test runs, so that we can query it later for patterns and other useful information. The tests also access the DB where needed. For example, say there's a test that checks that emails get sent out at the start of the next business day. Rather than keeping a test running for that long, the test stores the subject of the email in the DB, and when it runs again the next day it checks whether an email with that subject was received by the given address. In cases where the required wait is much shorter (e.g., 20 minutes), we can still use our delayed test system to keep our servers available for other tests during the waiting period.
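
The delayed-test idea can be sketched roughly as follows. This is not our actual implementation: it uses Python's built-in `sqlite3` as a stand-in for MySQL so the example is self-contained, and the table name, column names, and the `inbox_has_subject` callback are all assumptions made for illustration.

```python
import sqlite3
import time


def init_db(conn):
    """Create the (hypothetical) table that holds checks to perform later."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pending_checks ("
        " id INTEGER PRIMARY KEY,"
        " recipient TEXT NOT NULL,"
        " subject TEXT NOT NULL,"
        " due_at REAL NOT NULL,"
        " done INTEGER NOT NULL DEFAULT 0)"
    )


def schedule_check(conn, recipient, subject, delay_seconds, now=None):
    """First run: record what to look for, then let the test exit."""
    now = time.time() if now is None else now
    conn.execute(
        "INSERT INTO pending_checks (recipient, subject, due_at)"
        " VALUES (?, ?, ?)",
        (recipient, subject, now + delay_seconds),
    )
    conn.commit()


def run_due_checks(conn, inbox_has_subject, now=None):
    """Later run: verify every check whose wait has elapsed.

    inbox_has_subject(recipient, subject) is a caller-supplied function
    that returns True if the email arrived. Returns {subject: passed}.
    """
    now = time.time() if now is None else now
    rows = conn.execute(
        "SELECT id, recipient, subject FROM pending_checks"
        " WHERE done = 0 AND due_at <= ?",
        (now,),
    ).fetchall()
    results = {}
    for check_id, recipient, subject in rows:
        results[subject] = inbox_has_subject(recipient, subject)
        conn.execute(
            "UPDATE pending_checks SET done = 1 WHERE id = ?", (check_id,)
        )
    conn.commit()
    return results
```

Because the pending check lives in the database rather than in a sleeping process, no browser or grid slot is held during the wait, whether that wait is 20 minutes or a full day.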

(Above - A quick look at some of the tools we use for testing.)

With all of its adaptability and functionality, Selenium has proven itself to be a powerful solution for integration testing. We also use several other tools and services for testing our applications, including Pingdom, Sentry, Nagios, and more (some internally made); not to mention all those unit tests we have everywhere.

Testing is a big deal, and HubSpot is no exception. It's vital for us to keep our applications robust because we're not in the business of forcing our customers to be involuntary beta testers. With multiple apps contributing to HubSpot's integrated marketing solution, there's no shortage of testing to be done, and it's one of the ways we try to give our customers the best user experience possible.

(Michael Mintz leads the Selenium Automation at HubSpot)